This bug was initially raised by one of the developers on GCC Bugzilla

https://gcc.gnu.org/bugzilla/show_bug.cgi?id=97417

Where for a simple volatile load/store like:

#include <stdbool.h>

extern volatile bool active;

int foo(void)

{

if (!active) {

return 42;

} else {

return -42;

}

}

gcc will generate:

foo:

lui a5,%hi(active)

lbu a5,%lo(active)(a5)

li a0,42

andi a5,a5,0xff

beq a5,zero,.L1

li a0,-42

.L1:

ret

However, the definition of lbu from RISC-V spec mentioned:

” The LW instruction loads a 32-bit value from memory and sign-extends this to 64 bits before storing it in register rd for RV64I. The LWU instruction, on the other hand, zero-extends the 32-bit value from memory for RV64I. LH and LHU are defined analogously for 16-bit values, as are LB and LBU for 8-bit values. The SD, SW, SH, and SB instructions store 64-bit, 32-bit, 16-bit, and 8-bit values from the low bits of register rs2 to memory respectively. “

Therefore that “andi a5,a5,0xff” is obvious needless.

Jim replied:

Comparing with the ARM port, I see that in the ARM port, the movqi pattern emits

(insn 8 7 9 2 (set (reg:SI 117)

(zero_extend:SI (mem/v/c:QI (reg/f:SI 115) [1 active+0 S1 A8]))) “tmp.c\ “:7:7 -1

(nil))

(insn 9 8 10 2 (set (reg:QI 116)

(subreg:QI (reg:SI 117) 0)) “tmp.c”:7:7 -1

(nil))

and then later it combines the subreg operation with the following zero_extend and cancels them out.

Whereas in the RISC-V port, the movqi pattern emits

(insn 9 7 10 2 (set (reg:QI 76)

(mem/v/c:QI (lo_sum:DI (reg:DI 74)

(symbol_ref:DI (“active”) [flags 0xc4] <var_decl 0x7f9f0310312\ 0 active>)) [1 active+0 S1 A8])) “tmp.c”:7:7 -1

(nil))

and then combine refuses to combine the following zero-extend with this insn as the memory operation is volatile. So it seems we need to rewrite the RISC-V port to make movqi and movhi zero extend to si/di mode and then subreg. That probably will require cascading changes to avoid code size and performance regressions.

Looks like a tractable small to medium size project, but will need to wait for a volunteer to work on it.

So the solution looks simple, all we need is change how it moves in gcc/config/riscv/riscv.c

--- a/gcc/config/riscv/riscv.c

+++ b/gcc/config/riscv/riscv.c

@@ -1524,6 +1524,28 @@ riscv_legitimize_const_move (machine_mode mode, rtx dest, rtx src)

bool

riscv_legitimize_move (machine_mode mode, rtx dest, rtx src)

{

+ /* Expand

+ (set (reg:QI target) (mem:QI (address)))

+ to

+ (set (reg:DI temp) (zero_extend:DI (mem:QI (address))))

+ (set (reg:QI target) (subreg:QI (reg:DI temp) 0))

+ with auto-sign/zero extend. */

+ if (GET_MODE_CLASS (mode) == MODE_INT

+ && GET_MODE_SIZE (mode) < UNITS_PER_WORD

+ && can_create_pseudo_p ()

+ && MEM_P (src))

+ {

+ rtx temp_reg;

+ int zero_extend_p;

+

+ temp_reg = gen_reg_rtx (word_mode);

+ zero_extend_p = (LOAD_EXTEND_OP (mode) == ZERO_EXTEND);

+ emit_insn (gen_extend_insn (temp_reg, src, word_mode, mode,

+ zero_extend_p));

+ riscv_emit_move (dest, gen_lowpart (mode, temp_reg));

+ return true;

+ }

+

if (!register_operand (dest, mode) && !reg_or_0_operand (src, mode))

{

rtx reg;

now it appears that we successfully fix this issue, but some of the tests case with the -Os flag fails.

Given following program:

int load1r (int *array)

{

int a = 0;

a += array[200];

a += array[201];

a += array[202];

a += array[203];

return a;

}

with pass_shorten_memrefs defined in gcc/config/riscv/riscv-passes.def, when compiled with

gcc main.c -S -Os

supposedly we should get

load1r: addi a5,a0,768 lw a4,36(a5) lw a0,32(a5) addw a0,a0,a4 lw a4,40(a5) addw a4,a4,a0 lw a0,44(a5) addw a0,a0,a4 ret

the option -Os should be able to recognize that 4 sequential access to ram and make it in a form of Base+Offset (768+32) (768+36)… to reduce code size. But instead, we get:

load1r: lwu a4,800(a0) lwu a5,804(a0) addw a5,a5,a4 lwu a4,808(a0) lwu a0,812(a0) addw a5,a5,a4 addw a0,a5,a0 ret

So it looks like to me that shorten_memrefs pass fails to recognize the pattern with zero/sign extend, additional change to that pass needs to be done:

--- a/gcc/config/riscv/riscv-shorten-memrefs.c

+++ b/gcc/config/riscv/riscv-shorten-memrefs.c

@@ -75,12 +75,19 @@ private:

regno_map * analyze (basic_block bb);

void transform (regno_map *m, basic_block bb);

- bool get_si_mem_base_reg (rtx mem, rtx *addr);

+ bool get_si_mem_base_reg (rtx mem, rtx *addr, bool *extend);

}; // class pass_shorten_memrefs

bool

-pass_shorten_memrefs::get_si_mem_base_reg (rtx mem, rtx *addr)

+pass_shorten_memrefs::get_si_mem_base_reg (rtx mem, rtx *addr, bool *extend)

{

+ /* Whether it's sign/zero extended. */

+ if (GET_CODE (mem) == ZERO_EXTEND || GET_CODE (mem) == SIGN_EXTEND)

+ {

+ *extend = true;

+ mem = XEXP (mem, 0);

+ }

+

if (!MEM_P (mem) || GET_MODE (mem) != SImode)

return false;

*addr = XEXP (mem, 0);

@@ -110,7 +117,8 @@ pass_shorten_memrefs::analyze (basic_block bb)

{

rtx mem = XEXP (pat, i);

rtx addr;

- if (get_si_mem_base_reg (mem, &addr))

+ bool extend = false;

+ if (get_si_mem_base_reg (mem, &addr, &extend))

{

HOST_WIDE_INT regno = REGNO (XEXP (addr, 0));

/* Do not count store zero as these cannot be compressed. */

@@ -150,7 +158,8 @@ pass_shorten_memrefs::transform (regno_map *m, basic_block bb)

{

rtx mem = XEXP (pat, i);

rtx addr;

- if (get_si_mem_base_reg (mem, &addr))

+ bool extend = false;

+ if (get_si_mem_base_reg (mem, &addr, &extend))

{

HOST_WIDE_INT regno = REGNO (XEXP (addr, 0));

/* Do not transform store zero as these cannot be compressed. */

@@ -161,9 +170,20 @@ pass_shorten_memrefs::transform (regno_map *m, basic_block bb)

}

if (m->get_or_insert (regno) > 3)

{

- addr

- = targetm.legitimize_address (addr, addr, GET_MODE (mem));

- XEXP (pat, i) = replace_equiv_address (mem, addr);

+ if (extend)

+ {

+ addr

+ = targetm.legitimize_address (addr, addr,

+ GET_MODE (XEXP (mem, 0)));

+ XEXP (XEXP (pat, i), 0)

+ = replace_equiv_address (XEXP (mem, 0), addr);

+ }

+ else

+ {

+ addr = targetm.legitimize_address (addr, addr,

+ GET_MODE (mem));

+ XEXP (pat, i) = replace_equiv_address (mem, addr);

+ }

df_insn_rescan (insn);

}

}

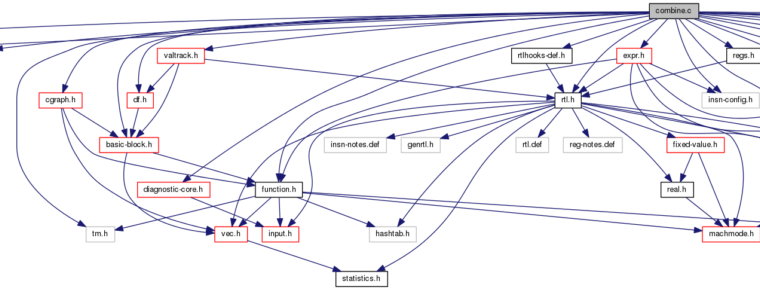

However there are still side effects in other passes of gcc backend. The combine pass refuses to do a 2-2 insn combine due to the extra zero/sign extend. My solution is to change that pass:

--- a/gcc/combine.c

+++ b/gcc/combine.c

@@ -2635,6 +2635,22 @@ is_just_move (rtx x)

return (GET_CODE (x) == SET && general_operand (SET_SRC (x), VOIDmode));

}

+/* Return whether X is just a single set, with the source

+ a sign/zero extended general_operand. */

+static bool

+is_just_extended_move (rtx x)

+{

+ if (INSN_P (x))

+ x = PATTERN (x);

+

+ if(GET_CODE (x) == SET)

+ if (GET_CODE (SET_SRC (x)) == ZERO_EXTEND

+ || GET_CODE (SET_SRC (x)) == SIGN_EXTEND)

+ return (general_operand ((XEXP (SET_SRC (x), 0)), VOIDmode));

+

+ return (GET_CODE (x) == SET && general_operand (SET_SRC (x), VOIDmode));

+}

+

/* Callback function to count autoincs. */

static int

@@ -3103,8 +3119,8 @@ try_combine (rtx_insn *i3, rtx_insn *i2, rtx_insn *i1, rtx_insn *i0,

}

/* Record whether i2 and i3 are trivial moves. */

- i2_was_move = is_just_move (i2);

- i3_was_move = is_just_move (i3);

+ i2_was_move = is_just_move (i2) || is_just_extended_move(i2);

+ i3_was_move = is_just_move (i3) || is_just_extended_move(i3);

/* Record whether I2DEST is used in I2SRC and similarly for the other

cases. Knowing this will help in register status updating below. */

But it will affect all other backend, So Jim proposed the solution:

The combine change is inconvenient. We can’t do that in stage3, and it means we need to make sure that this doesn’t break other targets.

If the combine change is a good idea, then I think you can just modify is_just_move rather than add a new function. Just skip over a ZERO_EXTEND or SIGN_EXTEND before the the general_operand check. We might need a mode check against UNITS_PER_WORD since extending past the word size is not necessarily a simple move.

So the problem stems from the code in combine that is willing to do a 2->2 split if neither original instruction is a simple move. When we add a SIGN_EXTEND or ZERO_EXTEND they aren’t considered simple moves anymore.

There is still the question of why the instruction cost allows the change. First I noticed that riscv_address_cost has a hook to check for shorten_memrefs but that riscv_rtx_costs isn’t calling it. It uses riscv_address_insns instead. So it seems like adding a shorten_memref check to the MEM case riscv_rtx_costs might solve the problem. But riscv_compressed_lw_address_p has a !reload_completed check, and combine runs before reload, so that returns the same result for both the new and old insns. I think that reload_completed check isn’t quite right. If we have a pseudo-reg, then we should allow that, but if we have an offset that is clearly not compressible, then we should reject it before reload. So I think the reload_completed check should be moved down to where it checks for a compressible register. With those two changes, I can make the testcase work without a combine change. Though since I changed how shorten_memrefs works we need a check to make sure this patch doens’t change code size. I haven’t tried to do that yet.

With my changes, in the combine dump, I see Successfully matched this instruction:

(set (reg/f:DI 92)

(plus:DI (reg:DI 96)

(const_int 768 [0x300])))

Successfully matched this instruction:

(set (reg:DI 82 [ MEM[(intD.1 *)array_5(D) + 800B] ])

(sign_extend:DI (mem:SI (plus:DI (reg:DI 96)

(const_int 800 [0x320])) [1 MEM[(intD.1 *)array_5(D) + 800B]+0 S4 A32])))

rejecting combination of insns 27 and 6 original costs 4 + 16 = 20 replacement costs 4 + 20 = 24

So Jim’s change:

--- a/gcc/config/riscv/riscv.c

+++ b/gcc/config/riscv/riscv.c

@@ -891,17 +891,13 @@ riscv_compressed_lw_address_p (rtx x)

bool result = riscv_classify_address (&addr, x, GET_MODE (x),

reload_completed);

- /* Before reload, assuming all load/stores of valid addresses get compressed

- gives better code size than checking if the address is reg + small_offset

- early on. */

- if (result && !reload_completed)

- return true;

-

/* Return false if address is not compressed_reg + small_offset. */

if (!result

|| addr.type != ADDRESS_REG

- || (!riscv_compressed_reg_p (REGNO (addr.reg))

- && addr.reg != stack_pointer_rtx)

+ /* Before reload, assume all registers are OK. */

+ || (reload_completed

+ && !riscv_compressed_reg_p (REGNO (addr.reg))

+ && addr.reg != stack_pointer_rtx)

|| !riscv_compressed_lw_offset_p (addr.offset))

return false;

@@ -1708,6 +1704,13 @@ riscv_rtx_costs (rtx x, machine_mode mode, int outer_code, int opno ATTRIBUTE_UN

instructions it needs. */

if ((cost = riscv_address_insns (XEXP (x, 0), mode, true)) > 0)

{

+ /* When optimizing for size, make uncompressible 32-bit addresses

+ more expensive so that compressible 32-bit addresses are

+ preferred. */

+ if (TARGET_RVC && !speed && riscv_mshorten_memrefs && mode == SImode

+ && !riscv_compressed_lw_address_p (XEXP (x, 0)))

+ cost++;

+

*total = COSTS_N_INSNS (cost + tune_param->memory_cost);

return true;

}

Here are 2 final commits to gcc master branch:

https://gcc.gnu.org/git/?p=gcc.git;a=commit;h=a4953810bac524e19126a2745c75fed58db962c2

https://gcc.gnu.org/git/gitweb.cgi?p=gcc.git;h=18fabc35f47f0abf4ec14d147098ec4e0734d2a3