This patch has been committed to the master branch:

https://gcc.gnu.org/pipermail/gcc-patches/2021-April/569135.html

https://gcc.gnu.org/git/?p=gcc.git;a=commit;h=6efd040c301b06fae51657c8370ad940c5c3d513

For background, see the GCC documentation on built-in functions to perform arithmetic with overflow checking:

https://gcc.gnu.org/onlinedocs/gcc/Integer-Overflow-Builtins.html

These functions are used pervasively in the kernel, drivers, and similar code, so adding dedicated patterns to the RISC-V backend should bring some performance improvement.

An example:

https://lwn.net/Articles/623368/

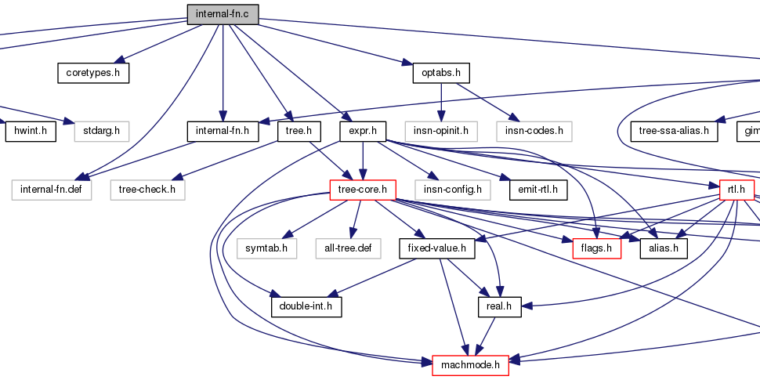
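To make the builtins concrete, here is a minimal sketch of how calling code typically uses one of them. The helper name `checked_add_int` is made up for illustration; only `__builtin_add_overflow` comes from the GCC documentation linked above.

```c
#include <stdbool.h>

/* Hypothetical helper: stores a + b in *sum and returns true when the
   exact sum fits in an int; returns false (leaving *sum untouched)
   when the addition would overflow.  */
bool
checked_add_int (int a, int b, int *sum)
{
  int tmp;

  /* The builtin returns true when the infinite-precision result does
     not fit in the type that the third argument points to.  */
  if (__builtin_add_overflow (a, b, &tmp))
    return false;

  *sum = tmp;
  return true;
}
```

Each such call ends up as a "compute, then branch on overflow" sequence, which is the shape the new backend patterns provide.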

Let’s start with how the middle end handles the internal arithmetic functions in GIMPLE:

gcc/internal-fn.c

static void

expand_arith_overflow (enum tree_code code, gimple *stmt)

{

tree lhs = gimple_call_lhs (stmt);

if (lhs == NULL_TREE)

return;

tree arg0 = gimple_call_arg (stmt, 0);

tree arg1 = gimple_call_arg (stmt, 1);

tree type = TREE_TYPE (TREE_TYPE (lhs));

int uns0_p = TYPE_UNSIGNED (TREE_TYPE (arg0));

int uns1_p = TYPE_UNSIGNED (TREE_TYPE (arg1));

int unsr_p = TYPE_UNSIGNED (type);

int prec0 = TYPE_PRECISION (TREE_TYPE (arg0));

int prec1 = TYPE_PRECISION (TREE_TYPE (arg1));

int precres = TYPE_PRECISION (type);

location_t loc = gimple_location (stmt);

if (!uns0_p && get_range_pos_neg (arg0) == 1)

uns0_p = true;

if (!uns1_p && get_range_pos_neg (arg1) == 1)

uns1_p = true;

int pr = get_min_precision (arg0, uns0_p ? UNSIGNED : SIGNED);

prec0 = MIN (prec0, pr);

pr = get_min_precision (arg1, uns1_p ? UNSIGNED : SIGNED);

prec1 = MIN (prec1, pr);

/* If uns0_p && uns1_p, precop is minimum needed precision

of unsigned type to hold the exact result, otherwise

precop is minimum needed precision of signed type to

hold the exact result. */

int precop;

if (code == MULT_EXPR)

precop = prec0 + prec1 + (uns0_p != uns1_p);

else

{

if (uns0_p == uns1_p)

precop = MAX (prec0, prec1) + 1;

else if (uns0_p)

precop = MAX (prec0 + 1, prec1) + 1;

else

precop = MAX (prec0, prec1 + 1) + 1;

}

int orig_precres = precres;

do

{

if ((uns0_p && uns1_p)

? ((precop + !unsr_p) <= precres

/* u1 - u2 -> ur can overflow, no matter what precision

the result has. */

&& (code != MINUS_EXPR || !unsr_p))

: (!unsr_p && precop <= precres))

{

/* The infinity precision result will always fit into result. */

rtx target = expand_expr (lhs, NULL_RTX, VOIDmode, EXPAND_WRITE);

write_complex_part (target, const0_rtx, true);

scalar_int_mode mode = SCALAR_INT_TYPE_MODE (type);

struct separate_ops ops;

ops.code = code;

ops.type = type;

ops.op0 = fold_convert_loc (loc, type, arg0);

ops.op1 = fold_convert_loc (loc, type, arg1);

ops.op2 = NULL_TREE;

ops.location = loc;

rtx tem = expand_expr_real_2 (&ops, NULL_RTX, mode, EXPAND_NORMAL);

expand_arith_overflow_result_store (lhs, target, mode, tem);

return;

}

/* For operations with low precision, if target doesn't have them, start

with precres widening right away, otherwise do it only if the most

simple cases can't be used. */

const int min_precision = targetm.min_arithmetic_precision ();

if (orig_precres == precres && precres < min_precision)

;

else if ((uns0_p && uns1_p && unsr_p && prec0 <= precres

&& prec1 <= precres)

|| ((!uns0_p || !uns1_p) && !unsr_p

&& prec0 + uns0_p <= precres

&& prec1 + uns1_p <= precres))

{

arg0 = fold_convert_loc (loc, type, arg0);

arg1 = fold_convert_loc (loc, type, arg1);

switch (code)

{

case MINUS_EXPR:

if (integer_zerop (arg0) && !unsr_p)

{

expand_neg_overflow (loc, lhs, arg1, false, NULL);

return;

}

/* FALLTHRU */

case PLUS_EXPR:

expand_addsub_overflow (loc, code, lhs, arg0, arg1, unsr_p,

unsr_p, unsr_p, false, NULL);

return;

case MULT_EXPR:

expand_mul_overflow (loc, lhs, arg0, arg1, unsr_p,

unsr_p, unsr_p, false, NULL);

return;

default:

gcc_unreachable ();

}

}

/* MORE BELOW BUT LET'S JUST IGNORE THEM FOR NOW */

......

}
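The precop rule in the listing above is easier to follow with numbers plugged in. The sketch below restates only that part of the logic as two stand-alone functions; the names `precop_for` and `always_fits` are my own, and the operand precisions are passed in directly instead of being derived with get_min_precision.

```c
#include <stdbool.h>

static int
max_int (int a, int b)
{
  return a > b ? a : b;
}

/* Minimum precision needed to hold the exact result, following the
   precop computation in expand_arith_overflow.  is_mult selects the
   MULT_EXPR rule; otherwise the PLUS_EXPR/MINUS_EXPR rule is used.  */
int
precop_for (bool is_mult, int prec0, bool uns0, int prec1, bool uns1)
{
  if (is_mult)
    return prec0 + prec1 + (uns0 != uns1);
  if (uns0 == uns1)
    return max_int (prec0, prec1) + 1;
  if (uns0)
    return max_int (prec0 + 1, prec1) + 1;
  return max_int (prec0, prec1 + 1) + 1;
}

/* True when the exact result always fits the result type, so no
   overflow check needs to be emitted at all.  */
bool
always_fits (bool is_minus, int precop, bool uns0, bool uns1,
             bool unsr, int precres)
{
  if (uns0 && uns1)
    /* u1 - u2 -> ur can overflow no matter how wide ur is.  */
    return precop + !unsr <= precres && (!is_minus || !unsr);
  return !unsr && precop <= precres;
}
```

For instance, adding two signed 16-bit values needs 17 bits, so a signed 32-bit result can never overflow and the early-return branch is taken.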

RISC-V did not implement targetm.min_arithmetic_precision, so, using the SPARC backend as a reference, I added:

diff --git a/gcc/config/riscv/riscv.c b/gcc/config/riscv/riscv.c

index d489717b2a5..cf94f5c9658 100644

--- a/gcc/config/riscv/riscv.c

+++ b/gcc/config/riscv/riscv.c

@@ -351,6 +351,14 @@ static const struct riscv_tune_info riscv_tune_info_table[] = {

{ "size", generic, &optimize_size_tune_info },

};

+/* Implement TARGET_MIN_ARITHMETIC_PRECISION. */

+

+static unsigned int

+riscv_min_arithmetic_precision (void)

+{

+ return 32;

+}

+

/* Return the riscv_tune_info entry for the given name string. */

static const struct riscv_tune_info *

diff --git a/gcc/config/riscv/riscv.h b/gcc/config/riscv/riscv.h

index 172c7ca7c98..0521c8881ae 100644

--- a/gcc/config/riscv/riscv.h

+++ b/gcc/config/riscv/riscv.h

@@ -121,6 +121,10 @@ extern const char *riscv_default_mtune (int argc, const char **argv);

#define MIN_UNITS_PER_WORD 4

#endif

+/* Allows SImode op in builtin overflow pattern, see internal-fn.c. */

+#undef TARGET_MIN_ARITHMETIC_PRECISION

+#define TARGET_MIN_ARITHMETIC_PRECISION riscv_min_arithmetic_precision

+

/* The `Q' extension is not yet supported. */

#define UNITS_PER_FP_REG (TARGET_DOUBLE_FLOAT ? 8 : 4)

This allows the *.w instructions to be used on RV64 when 32-bit operands are involved.

The overflow patterns are then expanded by functions such as the following:

expand_addsub_overflow (location_t loc, tree_code code, tree lhs,

tree arg0, tree arg1, bool unsr_p, bool uns0_p,

bool uns1_p, bool is_ubsan, tree *datap)

- code: the tree code of the operation (PLUS_EXPR or MINUS_EXPR)

- arg0: op0

- arg1: op1

- unsr_p: boolean, whether the result is unsigned.

- uns0_p: boolean, whether op0 is unsigned.

- uns1_p: boolean, whether op1 is unsigned.

Taking add/sub overflow as an example:

......

/* u1 +- u2 -> ur */

if (uns0_p && uns1_p && unsr_p)

{

insn_code icode = optab_handler (code == PLUS_EXPR ? uaddv4_optab

: usubv4_optab, mode);

......

/* s1 +- s2 -> sr */

do_signed:

{

insn_code icode = optab_handler (code == PLUS_EXPR ? addv4_optab

: subv4_optab, mode);

......

If arg0, arg1, and the result are all unsigned, the expansion uses uaddv4_optab or usubv4_optab, depending on the operation; in the all-signed case (s1 +- s2 -> sr) it uses addv4_optab or subv4_optab.

These optabs are defined in:

gcc/optabs.def

...

OPTAB_D (addv4_optab, "addv$I$a4")

OPTAB_D (subv4_optab, "subv$I$a4")

OPTAB_D (mulv4_optab, "mulv$I$a4")

OPTAB_D (uaddv4_optab, "uaddv$I$a4")

OPTAB_D (usubv4_optab, "usubv$I$a4")

OPTAB_D (umulv4_optab, "umulv$I$a4")

...

Then you’re free to add those patterns in:

gcc/config/riscv/riscv.md

For addition:

signed addition (SImode in RV32 || DImode in RV64):

add t0, t1, t2

slti t3, t2, 0

slt t4, t0, t1

bne t3, t4, overflow

signed addition (SImode in RV64):

add t0, t1, t2

addw t3, t1, t2

bne t0, t3, overflow

(define_expand "addv<mode>4"

[(set (match_operand:GPR 0 "register_operand" "=r,r")

(plus:GPR (match_operand:GPR 1 "register_operand" " r,r")

(match_operand:GPR 2 "arith_operand" " r,I")))

(label_ref (match_operand 3 "" ""))]

""

{

if (TARGET_64BIT && <MODE>mode == SImode)

{

rtx t3 = gen_reg_rtx (DImode);

rtx t4 = gen_reg_rtx (DImode);

rtx t5 = gen_reg_rtx (DImode);

rtx t6 = gen_reg_rtx (DImode);

emit_insn (gen_addsi3 (operands[0], operands[1], operands[2]));

if (GET_CODE (operands[1]) != CONST_INT)

emit_insn (gen_extend_insn (t4, operands[1], DImode, SImode, 0));

else

t4 = operands[1];

if (GET_CODE (operands[2]) != CONST_INT)

emit_insn (gen_extend_insn (t5, operands[2], DImode, SImode, 0));

else

t5 = operands[2];

emit_insn (gen_adddi3 (t3, t4, t5));

emit_insn (gen_extend_insn (t6, operands[0], DImode, SImode, 0));

riscv_expand_conditional_branch (operands[3], NE, t6, t3);

}

else

{

rtx t3 = gen_reg_rtx (<MODE>mode);

rtx t4 = gen_reg_rtx (<MODE>mode);

emit_insn (gen_add3_insn (operands[0], operands[1], operands[2]));

rtx cmp1 = gen_rtx_LT (<MODE>mode, operands[2], const0_rtx);

emit_insn (gen_cstore<mode>4 (t3, cmp1, operands[2], const0_rtx));

rtx cmp2 = gen_rtx_LT (<MODE>mode, operands[0], operands[1]);

emit_insn (gen_cstore<mode>4 (t4, cmp2, operands[0], operands[1]));

riscv_expand_conditional_branch (operands[3], NE, t3, t4);

}

DONE;

})
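To see why these two sequences detect signed overflow, here is a small C model of each. It is only a sketch for reasoning about the checks, not what the backend emits: the function names are invented, and the casts from unsigned back to signed rely on GCC's wrap-around conversion behaviour.

```c
#include <stdbool.h>
#include <stdint.h>

/* Word-sized case (add; slti; slt; bne), modelled for 64-bit words.
   Adding a negative b must make the sum smaller than a, and adding a
   non-negative b must not; any disagreement means overflow.  */
bool
sadd_overflow_word (int64_t a, int64_t b, int64_t *res)
{
  /* Wrapping add, done in unsigned arithmetic to avoid undefined
     behaviour in the model itself.  */
  int64_t sum = (int64_t) ((uint64_t) a + (uint64_t) b);

  *res = sum;
  return (b < 0) != (sum < a);
}

/* SImode on RV64 (add; addw; bne): the 64-bit sum of the sign-extended
   operands is exact, so it differs from the sign-extended 32-bit sum
   only when the 32-bit addition overflowed.  */
bool
sadd_overflow_si_rv64 (int32_t a, int32_t b, int32_t *res)
{
  int64_t wide = (int64_t) a + (int64_t) b;                   /* add  */
  int32_t narrow = (int32_t) ((uint32_t) a + (uint32_t) b);   /* addw */

  *res = narrow;
  return wide != (int64_t) narrow;
}
```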

unsigned addition (SImode in RV32 || DImode in RV64):

add t0, t1, t2

bltu t0, t1, overflow

unsigned addition (SImode in RV64):

sext.w t3, t1

addw t0, t1, t2

bltu t0, t3, overflow

(define_expand "uaddv<mode>4"

[(set (match_operand:GPR 0 "register_operand" "=r,r")

(plus:GPR (match_operand:GPR 1 "register_operand" " r,r")

(match_operand:GPR 2 "arith_operand" " r,I")))

(label_ref (match_operand 3 "" ""))]

""

{

if (TARGET_64BIT && <MODE>mode == SImode)

{

rtx t3 = gen_reg_rtx (DImode);

rtx t4 = gen_reg_rtx (DImode);

if (GET_CODE (operands[1]) != CONST_INT)

emit_insn (gen_extend_insn (t3, operands[1], DImode, SImode, 0));

else

t3 = operands[1];

emit_insn (gen_addsi3 (operands[0], operands[1], operands[2]));

emit_insn (gen_extend_insn (t4, operands[0], DImode, SImode, 0));

riscv_expand_conditional_branch (operands[3], LTU, t4, t3);

}

else

{

emit_insn (gen_add3_insn (operands[0], operands[1], operands[2]));

riscv_expand_conditional_branch (operands[3], LTU, operands[0],

operands[1]);

}

DONE;

})
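The unsigned check is simpler: a wrapped sum is always smaller than either operand. The RV64 SImode variant works on sign-extended values, which is fine because sign extension preserves the unsigned ordering of 32-bit values. A sketch in C, with invented function names and relying on GCC's wrap-around behaviour for the unsigned-to-signed cast:

```c
#include <stdbool.h>
#include <stdint.h>

/* Word-sized case (add; bltu), modelled for 64-bit words.  */
bool
uadd_overflow_word (uint64_t a, uint64_t b, uint64_t *res)
{
  uint64_t sum = a + b;   /* wraps modulo 2^64 */

  *res = sum;
  return sum < a;
}

/* Models sext.w: a 32-bit value as it sits in a 64-bit register.  */
static uint64_t
sext32 (uint32_t x)
{
  return (uint64_t) (int64_t) (int32_t) x;
}

/* SImode on RV64 (sext.w; addw; bltu).  Values with bit 31 clear stay
   below 2^31 and values with bit 31 set move to the top of the 64-bit
   range in the same relative order, so the 64-bit unsigned compare
   gives the same answer as a 32-bit unsigned compare would.  */
bool
uadd_overflow_si_rv64 (uint32_t a, uint32_t b, uint32_t *res)
{
  uint64_t a_ext = sext32 (a);   /* sext.w */
  uint32_t sum = a + b;          /* addw, result is sign-extended */

  *res = sum;
  return sext32 (sum) < a_ext;   /* bltu */
}
```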

For subtraction:

signed subtraction (SImode in RV32 || DImode in RV64):

sub t0, t1, t2

slti t3, t2, 0

slt t4, t1, t0

bne t3, t4, overflow

signed subtraction (SImode in RV64):

sub t0, t1, t2

subw t3, t1, t2

bne t0, t3, overflow

(define_expand "subv<mode>4"

[(set (match_operand:GPR 0 "register_operand" "= r")

(minus:GPR (match_operand:GPR 1 "reg_or_0_operand" " rJ")

(match_operand:GPR 2 "register_operand" " r")))

(label_ref (match_operand 3 "" ""))]

""

{

if (TARGET_64BIT && <MODE>mode == SImode)

{

rtx t3 = gen_reg_rtx (DImode);

rtx t4 = gen_reg_rtx (DImode);

rtx t5 = gen_reg_rtx (DImode);

rtx t6 = gen_reg_rtx (DImode);

emit_insn (gen_subsi3 (operands[0], operands[1], operands[2]));

if (GET_CODE (operands[1]) != CONST_INT)

emit_insn (gen_extend_insn (t4, operands[1], DImode, SImode, 0));

else

t4 = operands[1];

if (GET_CODE (operands[2]) != CONST_INT)

emit_insn (gen_extend_insn (t5, operands[2], DImode, SImode, 0));

else

t5 = operands[2];

emit_insn (gen_subdi3 (t3, t4, t5));

emit_insn (gen_extend_insn (t6, operands[0], DImode, SImode, 0));

riscv_expand_conditional_branch (operands[3], NE, t6, t3);

}

else

{

rtx t3 = gen_reg_rtx (<MODE>mode);

rtx t4 = gen_reg_rtx (<MODE>mode);

emit_insn (gen_sub3_insn (operands[0], operands[1], operands[2]));

rtx cmp1 = gen_rtx_LT (<MODE>mode, operands[2], const0_rtx);

emit_insn (gen_cstore<mode>4 (t3, cmp1, operands[2], const0_rtx));

rtx cmp2 = gen_rtx_LT (<MODE>mode, operands[1], operands[0]);

emit_insn (gen_cstore<mode>4 (t4, cmp2, operands[1], operands[0]));

riscv_expand_conditional_branch (operands[3], NE, t3, t4);

}

DONE;

})
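The signed subtraction check mirrors the addition one. As before, the following is only a C model with invented names, and it relies on GCC's wrap-around behaviour when converting unsigned values back to signed.

```c
#include <stdbool.h>
#include <stdint.h>

/* Word-sized case (sub; slti; slt; bne), modelled for 64-bit words.
   Subtracting a negative b must make the result larger than a, and
   subtracting a non-negative b must not; any disagreement means
   overflow.  */
bool
ssub_overflow_word (int64_t a, int64_t b, int64_t *res)
{
  int64_t diff = (int64_t) ((uint64_t) a - (uint64_t) b);

  *res = diff;
  return (b < 0) != (a < diff);
}

/* SImode on RV64 (sub; subw; bne): compare the exact 64-bit difference
   with the sign-extended 32-bit difference.  */
bool
ssub_overflow_si_rv64 (int32_t a, int32_t b, int32_t *res)
{
  int64_t wide = (int64_t) a - (int64_t) b;                   /* sub  */
  int32_t narrow = (int32_t) ((uint32_t) a - (uint32_t) b);   /* subw */

  *res = narrow;
  return wide != (int64_t) narrow;
}
```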

unsigned subtraction (SImode in RV32 || DImode in RV64):

sub t0, t1, t2

bltu t1, t0, overflow

unsigned subtraction (SImode in RV64):

sext.w t3, t1

subw t0, t1, t2

bltu t3, t0, overflow

(define_expand "usubv<mode>4"

[(set (match_operand:GPR 0 "register_operand" "= r")

(minus:GPR (match_operand:GPR 1 "reg_or_0_operand" " rJ")

(match_operand:GPR 2 "register_operand" " r")))

(label_ref (match_operand 3 "" ""))]

""

{

if (TARGET_64BIT && <MODE>mode == SImode)

{

rtx t3 = gen_reg_rtx (DImode);

rtx t4 = gen_reg_rtx (DImode);

if (GET_CODE (operands[1]) != CONST_INT)

emit_insn (gen_extend_insn (t3, operands[1], DImode, SImode, 0));

else

t3 = operands[1];

emit_insn (gen_subsi3 (operands[0], operands[1], operands[2]));

emit_insn (gen_extend_insn (t4, operands[0], DImode, SImode, 0));

riscv_expand_conditional_branch (operands[3], LTU, t3, t4);

}

else

{

emit_insn (gen_sub3_insn (operands[0], operands[1], operands[2]));

riscv_expand_conditional_branch (operands[3], LTU, operands[1],

operands[0]);

}

DONE;

})
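For unsigned subtraction the test is that the minuend ends up smaller than the wrapped difference, which is the LTU comparison of operands[1] against operands[0] in the expander above. A C sketch with invented names, again assuming GCC's wrap-around conversion for the sign-extension helper:

```c
#include <stdbool.h>
#include <stdint.h>

/* Word-sized case, modelled for 64-bit words: a - b wraps exactly
   when b > a, and in that case the wrapped result is larger than a.  */
bool
usub_overflow_word (uint64_t a, uint64_t b, uint64_t *res)
{
  uint64_t diff = a - b;   /* wraps modulo 2^64 */

  *res = diff;
  return a < diff;
}

/* Models sext.w: a 32-bit value as it sits in a 64-bit register.  */
static uint64_t
sext32 (uint32_t x)
{
  return (uint64_t) (int64_t) (int32_t) x;
}

/* SImode on RV64: the same comparison, done on sign-extended values.
   Sign extension keeps the unsigned order of 32-bit values intact.  */
bool
usub_overflow_si_rv64 (uint32_t a, uint32_t b, uint32_t *res)
{
  uint64_t a_ext = sext32 (a);   /* sext.w */
  uint32_t diff = a - b;         /* subw, result is sign-extended */

  *res = diff;
  return a_ext < sext32 (diff);  /* unsigned compare, minuend first */
}
```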

For multiplication:

signed multiplication (SImode in RV32 || DImode in RV64):

mulh t3, t1, t2

mul t0, t1, t2

srai t4, t0, 31/63 (RV32/64)

bne t3, t4, overflow

signed multiplication (SImode in RV64):

mul t0, t1, t2

sext.w t3, t0

bne t0, t3, overflow

(define_expand "mulv<mode>4"

[(set (match_operand:GPR 0 "register_operand" "=r")

(mult:GPR (match_operand:GPR 1 "register_operand" " r")

(match_operand:GPR 2 "register_operand" " r")))

(label_ref (match_operand 3 "" ""))]

"TARGET_MUL"

{

if (TARGET_64BIT && <MODE>mode == SImode)

{

rtx t3 = gen_reg_rtx (DImode);

rtx t4 = gen_reg_rtx (DImode);

rtx t5 = gen_reg_rtx (DImode);

rtx t6 = gen_reg_rtx (DImode);

if (GET_CODE (operands[1]) != CONST_INT)

emit_insn (gen_extend_insn (t4, operands[1], DImode, SImode, 0));

else

t4 = operands[1];

if (GET_CODE (operands[2]) != CONST_INT)

emit_insn (gen_extend_insn (t5, operands[2], DImode, SImode, 0));

else

t5 = operands[2];

emit_insn (gen_muldi3 (t3, t4, t5));

emit_move_insn (operands[0], gen_lowpart (SImode, t3));

emit_insn (gen_extend_insn (t6, operands[0], DImode, SImode, 0));

riscv_expand_conditional_branch (operands[3], NE, t6, t3);

}

else

{

rtx hp = gen_reg_rtx (<MODE>mode);

rtx lp = gen_reg_rtx (<MODE>mode);

emit_insn (gen_mul<mode>3_highpart (hp, operands[1], operands[2]));

emit_insn (gen_mul<mode>3 (operands[0], operands[1], operands[2]));

emit_insn (gen_ashr<mode>3 (lp, operands[0],

GEN_INT (BITS_PER_WORD - 1)));

riscv_expand_conditional_branch (operands[3], NE, hp, lp);

}

DONE;

})
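For signed multiplication, the product fits when the high half of the double-width product is nothing more than the sign extension of the low half. The sketch below models the word-sized case with 32-bit words (as on RV32) so that plain int64_t can hold the full product. The function names are invented, and the model assumes GCC's arithmetic right shift of negative values and wrap-around narrowing conversions.

```c
#include <stdbool.h>
#include <stdint.h>

/* Word-sized case (mulh; mul; srai; bne), modelled for 32-bit words.  */
bool
smul_overflow_rv32 (int32_t a, int32_t b, int32_t *res)
{
  int64_t prod = (int64_t) a * (int64_t) b;   /* exact product */
  int32_t hi = (int32_t) (prod >> 32);        /* mulh */
  int32_t lo = (int32_t) (uint32_t) prod;     /* mul  */

  *res = lo;
  /* srai lo, 31 yields 0 or -1: the high word a fitting result
     must have.  */
  return hi != (lo >> 31);
}

/* SImode on RV64 (mul; sext.w; bne): the 64-bit product of the
   sign-extended operands is exact, so it must equal its own low
   32 bits sign-extended.  */
bool
smul_overflow_si_rv64 (int32_t a, int32_t b, int32_t *res)
{
  int64_t wide = (int64_t) a * (int64_t) b;     /* mul    */
  int32_t narrow = (int32_t) (uint32_t) wide;   /* sext.w */

  *res = narrow;
  return wide != (int64_t) narrow;
}
```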

unsigned multiplication (SImode in RV32 || DImode in RV64 ):

mulhu t3, t1, t2

mul t0, t1, t2

bne t3, zero, overflow

unsigned multiplication (SImode in RV64):

slli t0, t0, 32

slli t1, t1, 32

mulhu t2, t0, t1

srli t3, t2, 32

bne t3, zero, overflow

sext.w t2, t2

(define_expand "umulv<mode>4"

[(set (match_operand:GPR 0 "register_operand" "=r")

(mult:GPR (match_operand:GPR 1 "register_operand" " r")

(match_operand:GPR 2 "register_operand" " r")))

(label_ref (match_operand 3 "" ""))]

"TARGET_MUL"

{

if (TARGET_64BIT && <MODE>mode == SImode)

{

rtx t3 = gen_reg_rtx (DImode);

rtx t4 = gen_reg_rtx (DImode);

rtx t5 = gen_reg_rtx (DImode);

rtx t6 = gen_reg_rtx (DImode);

rtx t7 = gen_reg_rtx (DImode);

rtx t8 = gen_reg_rtx (DImode);

if (GET_CODE (operands[1]) != CONST_INT)

emit_insn (gen_extend_insn (t3, operands[1], DImode, SImode, 0));

else

t3 = operands[1];

if (GET_CODE (operands[2]) != CONST_INT)

emit_insn (gen_extend_insn (t4, operands[2], DImode, SImode, 0));

else

t4 = operands[2];

emit_insn (gen_ashldi3 (t5, t3, GEN_INT (32)));

emit_insn (gen_ashldi3 (t6, t4, GEN_INT (32)));

emit_insn (gen_umuldi3_highpart (t7, t5, t6));

emit_move_insn (operands[0], gen_lowpart (SImode, t7));

emit_insn (gen_lshrdi3 (t8, t7, GEN_INT (32)));

riscv_expand_conditional_branch (operands[3], NE, t8, const0_rtx);

}

else

{

rtx hp = gen_reg_rtx (<MODE>mode);

emit_insn (gen_umul<mode>3_highpart (hp, operands[1], operands[2]));

emit_insn (gen_mul<mode>3 (operands[0], operands[1], operands[2]));

riscv_expand_conditional_branch (operands[3], NE, hp, const0_rtx);

}

DONE;

})
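The RV64 SImode trick deserves a second look: shifting both operands left by 32 and taking the high 64 bits of the 128-bit product yields exactly the 64-bit product a * b, regardless of how the upper register bits were extended beforehand. The C model below illustrates this. The names are invented, and mulhu is modelled with `unsigned __int128`, a GCC extension that is assumed to be available on the 64-bit host used to run the sketch.

```c
#include <stdbool.h>
#include <stdint.h>

/* Word-sized case (mulhu; mul; branch if the high part is nonzero),
   modelled for 32-bit words.  */
bool
umul_overflow_rv32 (uint32_t a, uint32_t b, uint32_t *res)
{
  uint64_t prod = (uint64_t) a * (uint64_t) b;   /* exact product */

  *res = (uint32_t) prod;                /* mul   */
  return (uint32_t) (prod >> 32) != 0;   /* mulhu */
}

/* Models the 64-bit mulhu instruction: high half of a 128-bit
   unsigned product.  */
static uint64_t
mulhu64 (uint64_t x, uint64_t y)
{
  return (uint64_t) (((unsigned __int128) x * y) >> 64);
}

/* SImode on RV64 (slli; slli; mulhu; srli; branch).  */
bool
umul_overflow_si_rv64 (uint32_t a, uint32_t b, uint32_t *res)
{
  uint64_t a_hi = (uint64_t) a << 32;      /* slli: drops upper bits */
  uint64_t b_hi = (uint64_t) b << 32;      /* slli */
  uint64_t full = mulhu64 (a_hi, b_hi);    /* equals (uint64_t) a * b */

  *res = (uint32_t) full;                  /* low 32 bits are the result */
  return (full >> 32) != 0;                /* srli; nonzero means overflow */
}
```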

Some test cases for reference (written by Jim Wilson):

#include <stdlib.h>

int

sub_add_p (int i, int j)

{

int k;

return __builtin_add_overflow_p (i, j, k);

}

int

sub_sub_p (int i, int j)

{

int k;

return __builtin_sub_overflow_p (i, j, k);

}

int

sub_mul_p (int i, int j)

{

int k;

return __builtin_mul_overflow_p (i, j, k);

}

long

sub_add_p_long (long i, long j)

{

long k;

return __builtin_add_overflow_p (i, j, k);

}

long

sub_sub_p_long (long i, long j)

{

long k;

return __builtin_sub_overflow_p (i, j, k);

}

long

sub_mul_p_long (long i, long j)

{

long k;

return __builtin_mul_overflow_p (i, j, k);

}

int

sub_add (int i, int j)

{

int k;

if (__builtin_sadd_overflow (i, j, &k))

abort ();

return k;

}

int

sub_sub (int i, int j)

{

int k;

if (__builtin_ssub_overflow (i, j, &k))

abort ();

return k;

}

int

sub_mul (int i, int j)

{

int k;

if (__builtin_smul_overflow (i, j, &k))

abort ();

return k;

}

int

sub_uadd (int i, int j)

{

int k;

if (__builtin_uadd_overflow (i, j, &k))

abort ();

return k;

}

int

sub_usub (int i, int j)

{

int k;

if (__builtin_usub_overflow (i, j, &k))

abort ();

return k;

}

int

sub_umul (int i, int j)

{

int k;

if (__builtin_umul_overflow (i, j, &k))

abort ();

return k;

}

long

sub_add_long (long i, long j)

{

long k;

if (__builtin_saddl_overflow (i, j, &k))

abort ();

return k;

}

long

sub_sub_long (long i, long j)

{

long k;

if (__builtin_ssubl_overflow (i, j, &k))

abort ();

return k;

}

long

sub_mul_long (long i, long j)

{

long k;

if (__builtin_smull_overflow (i, j, &k))

abort ();

return k;

}

long

sub_uadd_long (long i, long j)

{

long k;

if (__builtin_uaddl_overflow (i, j, &k))

abort ();

return k;

}

long

sub_usub_long (long i, long j)

{

long k;

if (__builtin_usubl_overflow (i, j, &k))

abort ();

return k;

}

long

sub_umul_long (long i, long j)

{

long k;

if (__builtin_umull_overflow (i, j, &k))

abort ();

return k;

}

Whole patch:

From 6efd040c301b06fae51657c8370ad940c5c3d513 Mon Sep 17 00:00:00 2001 From: LevyHsu <[email protected]> Date: Thu, 29 Apr 2021 13:42:04 +0800 Subject: [PATCH] RISC-V: Add patterns for builtin overflow. gcc/ * config/riscv/riscv.c (riscv_min_arithmetic_precision): New. * config/riscv/riscv.h (TARGET_MIN_ARITHMETIC_PRECISION): New. * config/riscv/riscv.md (addv<mode>4, uaddv<mode>4): New. (subv<mode>4, usubv<mode>4, mulv<mode>4, umulv<mode>4): New. --- gcc/config/riscv/riscv.c | 8 ++ gcc/config/riscv/riscv.h | 4 + gcc/config/riscv/riscv.md | 245 ++++++++++++++++++++++++++++++++++++++ 3 files changed, 257 insertions(+) diff --git a/gcc/config/riscv/riscv.c b/gcc/config/riscv/riscv.c index 17cdf705c32..e1064e374eb 100644 --- a/gcc/config/riscv/riscv.c +++ b/gcc/config/riscv/riscv.c @@ -351,6 +351,14 @@ static const struct riscv_tune_info riscv_tune_info_table[] = { { "size", generic, &optimize_size_tune_info }, }; +/* Implement TARGET_MIN_ARITHMETIC_PRECISION. */ + +static unsigned int +riscv_min_arithmetic_precision (void) +{ + return 32; +} + /* Return the riscv_tune_info entry for the given name string. */ static const struct riscv_tune_info * diff --git a/gcc/config/riscv/riscv.h b/gcc/config/riscv/riscv.h index d17096e1dfa..f3e85723c85 100644 --- a/gcc/config/riscv/riscv.h +++ b/gcc/config/riscv/riscv.h @@ -146,6 +146,10 @@ ASM_MISA_SPEC #define MIN_UNITS_PER_WORD 4 #endif +/* Allows SImode op in builtin overflow pattern, see internal-fn.c. */ +#undef TARGET_MIN_ARITHMETIC_PRECISION +#define TARGET_MIN_ARITHMETIC_PRECISION riscv_min_arithmetic_precision + /* The `Q' extension is not yet supported. */ #define UNITS_PER_FP_REG (TARGET_DOUBLE_FLOAT ? 
8 : 4) diff --git a/gcc/config/riscv/riscv.md b/gcc/config/riscv/riscv.md index c3687d57047..0e35960fefa 100644 --- a/gcc/config/riscv/riscv.md +++ b/gcc/config/riscv/riscv.md @@ -467,6 +467,81 @@ [(set_attr "type" "arith") (set_attr "mode" "DI")]) +(define_expand "addv<mode>4" + [(set (match_operand:GPR 0 "register_operand" "=r,r") + (plus:GPR (match_operand:GPR 1 "register_operand" " r,r") + (match_operand:GPR 2 "arith_operand" " r,I"))) + (label_ref (match_operand 3 "" ""))] + "" +{ + if (TARGET_64BIT && <MODE>mode == SImode) + { + rtx t3 = gen_reg_rtx (DImode); + rtx t4 = gen_reg_rtx (DImode); + rtx t5 = gen_reg_rtx (DImode); + rtx t6 = gen_reg_rtx (DImode); + + emit_insn (gen_addsi3 (operands[0], operands[1], operands[2])); + if (GET_CODE (operands[1]) != CONST_INT) + emit_insn (gen_extend_insn (t4, operands[1], DImode, SImode, 0)); + else + t4 = operands[1]; + if (GET_CODE (operands[2]) != CONST_INT) + emit_insn (gen_extend_insn (t5, operands[2], DImode, SImode, 0)); + else + t5 = operands[2]; + emit_insn (gen_adddi3 (t3, t4, t5)); + emit_insn (gen_extend_insn (t6, operands[0], DImode, SImode, 0)); + + riscv_expand_conditional_branch (operands[3], NE, t6, t3); + } + else + { + rtx t3 = gen_reg_rtx (<MODE>mode); + rtx t4 = gen_reg_rtx (<MODE>mode); + + emit_insn (gen_add3_insn (operands[0], operands[1], operands[2])); + rtx cmp1 = gen_rtx_LT (<MODE>mode, operands[2], const0_rtx); + emit_insn (gen_cstore<mode>4 (t3, cmp1, operands[2], const0_rtx)); + rtx cmp2 = gen_rtx_LT (<MODE>mode, operands[0], operands[1]); + + emit_insn (gen_cstore<mode>4 (t4, cmp2, operands[0], operands[1])); + riscv_expand_conditional_branch (operands[3], NE, t3, t4); + } + DONE; +}) + +(define_expand "uaddv<mode>4" + [(set (match_operand:GPR 0 "register_operand" "=r,r") + (plus:GPR (match_operand:GPR 1 "register_operand" " r,r") + (match_operand:GPR 2 "arith_operand" " r,I"))) + (label_ref (match_operand 3 "" ""))] + "" +{ + if (TARGET_64BIT && <MODE>mode == SImode) + { + rtx t3 = 
gen_reg_rtx (DImode); + rtx t4 = gen_reg_rtx (DImode); + + if (GET_CODE (operands[1]) != CONST_INT) + emit_insn (gen_extend_insn (t3, operands[1], DImode, SImode, 0)); + else + t3 = operands[1]; + emit_insn (gen_addsi3 (operands[0], operands[1], operands[2])); + emit_insn (gen_extend_insn (t4, operands[0], DImode, SImode, 0)); + + riscv_expand_conditional_branch (operands[3], LTU, t4, t3); + } + else + { + emit_insn (gen_add3_insn (operands[0], operands[1], operands[2])); + riscv_expand_conditional_branch (operands[3], LTU, operands[0], + operands[1]); + } + + DONE; +}) + (define_insn "*addsi3_extended" [(set (match_operand:DI 0 "register_operand" "=r,r") (sign_extend:DI @@ -523,6 +598,85 @@ [(set_attr "type" "arith") (set_attr "mode" "SI")]) +(define_expand "subv<mode>4" + [(set (match_operand:GPR 0 "register_operand" "= r") + (minus:GPR (match_operand:GPR 1 "reg_or_0_operand" " rJ") + (match_operand:GPR 2 "register_operand" " r"))) + (label_ref (match_operand 3 "" ""))] + "" +{ + if (TARGET_64BIT && <MODE>mode == SImode) + { + rtx t3 = gen_reg_rtx (DImode); + rtx t4 = gen_reg_rtx (DImode); + rtx t5 = gen_reg_rtx (DImode); + rtx t6 = gen_reg_rtx (DImode); + + emit_insn (gen_subsi3 (operands[0], operands[1], operands[2])); + if (GET_CODE (operands[1]) != CONST_INT) + emit_insn (gen_extend_insn (t4, operands[1], DImode, SImode, 0)); + else + t4 = operands[1]; + if (GET_CODE (operands[2]) != CONST_INT) + emit_insn (gen_extend_insn (t5, operands[2], DImode, SImode, 0)); + else + t5 = operands[2]; + emit_insn (gen_subdi3 (t3, t4, t5)); + emit_insn (gen_extend_insn (t6, operands[0], DImode, SImode, 0)); + + riscv_expand_conditional_branch (operands[3], NE, t6, t3); + } + else + { + rtx t3 = gen_reg_rtx (<MODE>mode); + rtx t4 = gen_reg_rtx (<MODE>mode); + + emit_insn (gen_sub3_insn (operands[0], operands[1], operands[2])); + + rtx cmp1 = gen_rtx_LT (<MODE>mode, operands[2], const0_rtx); + emit_insn (gen_cstore<mode>4 (t3, cmp1, operands[2], const0_rtx)); + + rtx cmp2 = 
gen_rtx_LT (<MODE>mode, operands[1], operands[0]); + emit_insn (gen_cstore<mode>4 (t4, cmp2, operands[1], operands[0])); + + riscv_expand_conditional_branch (operands[3], NE, t3, t4); + } + + DONE; +}) + +(define_expand "usubv<mode>4" + [(set (match_operand:GPR 0 "register_operand" "= r") + (minus:GPR (match_operand:GPR 1 "reg_or_0_operand" " rJ") + (match_operand:GPR 2 "register_operand" " r"))) + (label_ref (match_operand 3 "" ""))] + "" +{ + if (TARGET_64BIT && <MODE>mode == SImode) + { + rtx t3 = gen_reg_rtx (DImode); + rtx t4 = gen_reg_rtx (DImode); + + if (GET_CODE (operands[1]) != CONST_INT) + emit_insn (gen_extend_insn (t3, operands[1], DImode, SImode, 0)); + else + t3 = operands[1]; + emit_insn (gen_subsi3 (operands[0], operands[1], operands[2])); + emit_insn (gen_extend_insn (t4, operands[0], DImode, SImode, 0)); + + riscv_expand_conditional_branch (operands[3], LTU, t3, t4); + } + else + { + emit_insn (gen_sub3_insn (operands[0], operands[1], operands[2])); + riscv_expand_conditional_branch (operands[3], LTU, operands[1], + operands[0]); + } + + DONE; +}) + + (define_insn "*subsi3_extended" [(set (match_operand:DI 0 "register_operand" "= r") (sign_extend:DI @@ -614,6 +768,97 @@ [(set_attr "type" "imul") (set_attr "mode" "DI")]) +(define_expand "mulv<mode>4" + [(set (match_operand:GPR 0 "register_operand" "=r") + (mult:GPR (match_operand:GPR 1 "register_operand" " r") + (match_operand:GPR 2 "register_operand" " r"))) + (label_ref (match_operand 3 "" ""))] + "TARGET_MUL" +{ + if (TARGET_64BIT && <MODE>mode == SImode) + { + rtx t3 = gen_reg_rtx (DImode); + rtx t4 = gen_reg_rtx (DImode); + rtx t5 = gen_reg_rtx (DImode); + rtx t6 = gen_reg_rtx (DImode); + + if (GET_CODE (operands[1]) != CONST_INT) + emit_insn (gen_extend_insn (t4, operands[1], DImode, SImode, 0)); + else + t4 = operands[1]; + if (GET_CODE (operands[2]) != CONST_INT) + emit_insn (gen_extend_insn (t5, operands[2], DImode, SImode, 0)); + else + t5 = operands[2]; + emit_insn (gen_muldi3 (t3, t4, 
t5)); + + emit_move_insn (operands[0], gen_lowpart (SImode, t3)); + emit_insn (gen_extend_insn (t6, operands[0], DImode, SImode, 0)); + + riscv_expand_conditional_branch (operands[3], NE, t6, t3); + } + else + { + rtx hp = gen_reg_rtx (<MODE>mode); + rtx lp = gen_reg_rtx (<MODE>mode); + + emit_insn (gen_mul<mode>3_highpart (hp, operands[1], operands[2])); + emit_insn (gen_mul<mode>3 (operands[0], operands[1], operands[2])); + emit_insn (gen_ashr<mode>3 (lp, operands[0], + GEN_INT (BITS_PER_WORD - 1))); + + riscv_expand_conditional_branch (operands[3], NE, hp, lp); + } + + DONE; +}) + +(define_expand "umulv<mode>4" + [(set (match_operand:GPR 0 "register_operand" "=r") + (mult:GPR (match_operand:GPR 1 "register_operand" " r") + (match_operand:GPR 2 "register_operand" " r"))) + (label_ref (match_operand 3 "" ""))] + "TARGET_MUL" +{ + if (TARGET_64BIT && <MODE>mode == SImode) + { + rtx t3 = gen_reg_rtx (DImode); + rtx t4 = gen_reg_rtx (DImode); + rtx t5 = gen_reg_rtx (DImode); + rtx t6 = gen_reg_rtx (DImode); + rtx t7 = gen_reg_rtx (DImode); + rtx t8 = gen_reg_rtx (DImode); + + if (GET_CODE (operands[1]) != CONST_INT) + emit_insn (gen_extend_insn (t3, operands[1], DImode, SImode, 0)); + else + t3 = operands[1]; + if (GET_CODE (operands[2]) != CONST_INT) + emit_insn (gen_extend_insn (t4, operands[2], DImode, SImode, 0)); + else + t4 = operands[2]; + + emit_insn (gen_ashldi3 (t5, t3, GEN_INT (32))); + emit_insn (gen_ashldi3 (t6, t4, GEN_INT (32))); + emit_insn (gen_umuldi3_highpart (t7, t5, t6)); + emit_move_insn (operands[0], gen_lowpart (SImode, t7)); + emit_insn (gen_lshrdi3 (t8, t7, GEN_INT (32))); + + riscv_expand_conditional_branch (operands[3], NE, t8, const0_rtx); + } + else + { + rtx hp = gen_reg_rtx (<MODE>mode); + + emit_insn (gen_umul<mode>3_highpart (hp, operands[1], operands[2])); + emit_insn (gen_mul<mode>3 (operands[0], operands[1], operands[2])); + + riscv_expand_conditional_branch (operands[3], NE, hp, const0_rtx); + } + + DONE; +}) + (define_insn 
"*mulsi3_extended" [(set (match_operand:DI 0 "register_operand" "=r") (sign_extend:DI -- 2.27.0

Special thanks to:

- Jim Wilson, for help and advice throughout, on both gcc and gdb.

- Craig Topper, for pointing out that the SImode operand needs sext.w for unsigned add/sub in RV64.

- Andrew Waterman, for the better SImode signed add/sub and unsigned mul patterns in RV64.

- Kito Cheng, for patch submission.